Chapter 3 Locally dependent exponential random network models

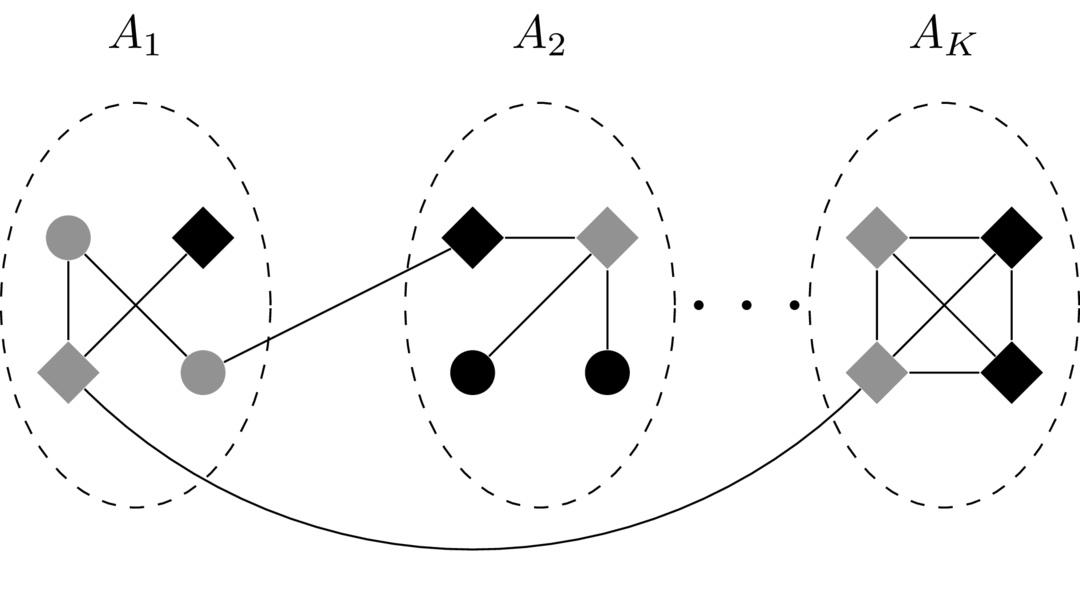

Figure 3.1: A locally dependent random network with neighborhoods and two binary node attributes, represented as gray or black and circle or diamond.

To begin, we define a random network, as developed by Fellows & Handcock (2012). By way of motivation, note that in the ERGM the nodal variates are fixed and enter the model as explanatory variables for making inferences about network structure. Conversely, there is a class of models, not discussed here, that treats the network as a fixed explanatory variable in modeling (random) nodal attributes. It is not difficult to come up with situations in which a researcher would like to model the network and the node attributes jointly. Thus we define a class of networks in which both the network structure and the attributes of the individual nodes are modeled as random quantities.

Roughly, this is the proportion of the total sample space that is possible with fixed. This is not, in general, equal to one, so the ERNM is not equal to the ERGM (Fellows & Handcock, 2012).

3.1 Definitions and notation

We will consistently refer to a set of nodes, , as the -th neighborhood, with an uppercase representing the total number of neighborhoods and a lowercase representing a specific neighborhood. The variable will refer to the domain of a random network, usually the union of a collection of neighborhoods. Nodes within the network will be indexed by the variables and , with , where is referring to the edge between nodes and , and and refer to the random vectors of node attributes. Abstracting this further, and will also refer to tuples of nodes, so we will write . The variables and will also often carry a subscript of or (for example ) which emphasizes that the edge from to is within or between neighborhoods, respectively. Finally, for lack of a better notation, the indicator function (where is for between) is one if and where , and zero otherwise.

3.2 Preliminary theorems

In proving our theorems, we will make use of several other central limit theorems, all of which can be found in Billingsley (1995). The first is the Lindeberg-Feller central limit theorem for triangular arrays. The second is Lyapounov’s condition, which gives a convenient way to show that the Lindeberg-Feller theorem holds. Finally, we make use of a central limit theorem for dependent random variables. For the sake of brevity, in this section we state each of these without proof.
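For reference, Lyapounov's condition is usually stated along the following lines. The symbols $X_{n,k}$, $r_n$, $s_n$ and $\delta$ are generic textbook notation, introduced here only for illustration; they are not the notation used for network statistics later in this chapter.

```latex
% Triangular array: for each n, X_{n,1}, ..., X_{n,r_n} are independent,
% with E[X_{n,k}] = 0 and finite variances \sigma_{n,k}^2.
\[
  s_n^2 = \sum_{k=1}^{r_n} \sigma_{n,k}^2 ,
  \qquad
  \lim_{n \to \infty} \frac{1}{s_n^{2+\delta}}
  \sum_{k=1}^{r_n} \mathbb{E}\,\lvert X_{n,k} \rvert^{2+\delta} = 0
  \quad \text{for some } \delta > 0 .
\]
% If this holds, then the Lindeberg condition holds as well, and
\[
  \frac{1}{s_n} \sum_{k=1}^{r_n} X_{n,k}
  \;\xrightarrow{\;d\;}\; N(0, 1) .
\]
```

The practical appeal is that a bound on a single higher absolute moment of each term is often easier to verify than the Lindeberg condition itself.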

The final theorem is Slutsky’s theorem, a classic result of asymptotic theory in statistics.
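As a reminder, the usual textbook form of Slutsky's theorem is sketched below. Here $X_n$, $Y_n$, $X$ and the constant $c$ are generic placeholders chosen for this illustration, not quantities defined elsewhere in the chapter.

```latex
% If X_n converges in distribution to X and Y_n converges in probability
% to a constant c, then:
\[
  X_n \xrightarrow{\;d\;} X , \quad Y_n \xrightarrow{\;p\;} c
  \;\;\Longrightarrow\;\;
  X_n + Y_n \xrightarrow{\;d\;} X + c
  \quad \text{and} \quad
  X_n Y_n \xrightarrow{\;d\;} cX .
\]
```

The additive case with $c = 0$ says that a term which vanishes in probability does not change the limiting distribution of the sum.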

3.3 Consistency under sampling

With these in place, we attempt to extend a result about locally dependent ERGMs proven by Schweinberger & Handcock (2015) to locally dependent ERNMs. In short, this theorem states that the parameters estimated by modeling a small sample of a larger network can be generalized to the overall network. This is not true of ERGMs in general: Shalizi & Rinaldo (2013) showed that most useful formulations of ERGMs do not form projective exponential families, in the sense that the distribution of a subgraph cannot, in general, be recovered by marginalizing the distribution of a larger graph with respect to the edge variables not included in the smaller graph. Hence, for such models, we are unable to generalize parameter estimates from the subnetwork to the total network.

To show that locally dependent ERNMs do form a projective family, let be a collection of sets , where each is a finite collection of neighborhoods. Also, allow the set to be an ideal, so that if , every subset of is also in and if , then . If , think of passing from the set to the set as taking a larger sample of the (possibly infinite) set of neighborhoods in the larger network. Then let be the collection of ERNMs with parameter indexed by the sets in . For each , let be the collection of ERNMs on the neighborhoods in with parameter where is open. Assume that each distribution in has the same support and that if and only if . Then, the exponential family is projective in the sense of Shalizi & Rinaldo (2013 Definition 1) precisely when Theorem 3.6 holds.

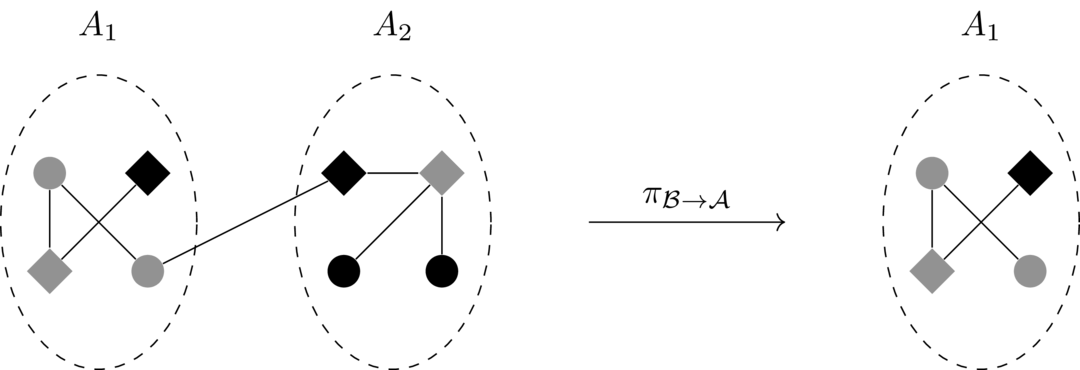

This follows from a specific case of the general definition given by Shalizi & Rinaldo (2013). There, for every pair and with , they define the natural projection mapping . Informally, this mapping projects the set down to by simply removing the extra data. For example if and as in Figure 3.1, then the mapping is shown in Figure 3.2.

Figure 3.2: The projection mapping from to .
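The natural projection described above can be illustrated with a small sketch. It assumes a network stored as a set of undirected edges, a dictionary of node attributes, and a dictionary assigning each node to a neighborhood; the function name `project_network` and this data layout are hypothetical choices for illustration, not taken from Shalizi & Rinaldo (2013).

```python
def project_network(edges, attributes, neighborhood_of, keep):
    """Restrict a network to the nodes whose neighborhood label is in `keep`.

    edges           -- set of (i, j) node pairs (hypothetical representation)
    attributes      -- dict mapping node -> attribute value
    neighborhood_of -- dict mapping node -> neighborhood label
    keep            -- set of neighborhood labels to retain
    """
    # Nodes that survive the projection.
    nodes = {v for v, k in neighborhood_of.items() if k in keep}
    # An edge survives only if both of its endpoints survive.
    sub_edges = {(i, j) for (i, j) in edges if i in nodes and j in nodes}
    # Attributes of the dropped nodes are simply discarded.
    sub_attributes = {v: attributes[v] for v in nodes}
    return sub_edges, sub_attributes


# Toy example: two neighborhoods, "a" = {0, 1} and "b" = {2, 3}.
neighborhood_of = {0: "a", 1: "a", 2: "b", 3: "b"}
attributes = {0: "gray", 1: "black", 2: "gray", 3: "black"}
edges = {(0, 1), (1, 2), (2, 3)}

sub_edges, sub_attributes = project_network(
    edges, attributes, neighborhood_of, keep={"a"}
)
```

In the toy example, projecting onto neighborhood "a" keeps the within-neighborhood edge (0, 1) and drops both the between-neighborhood edge (1, 2) and everything in neighborhood "b".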

This is desirable because Shalizi & Rinaldo (2013) have demonstrated the following theorem.

This is trivially achieved by setting for all values of and setting . We have differentiability of with respect to by a result from multivariable calculus that follows from Fubini’s theorem. From a practical perspective, this means that a researcher using this model can assume that parameters estimated from samples of a large network are increasingly good approximations for the true parameter values as the sample size increases.

3.4 Asymptotic normality of statistics

In this section we will prove that certain classes of statistics of locally dependent random networks are asymptotically normally distributed as the number of neighborhoods tends to infinity. The statistics we consider can be classified into three types: first, statistics which depend only on the graph structure; second, statistics that depend on both the graph and the nodal variates; and third, statistics that depend only on the nodal variates. The first class of statistics has already been considered by Schweinberger & Handcock (2015), but we will reproduce the proof here, as the second proof is very similar. The third class of statistics becomes normal in the limit by a central limit theorem for dependent random variables in Billingsley (1995).

Before we begin to explicitly define each of these classes, we clarify the notation that will be used. A general statistic will be a function where is the -fold Cartesian product of the set of nodes, , with itself. Additionally, the statistic will often carry a subscript , indicating that the statistic is of the random network with neighborhoods.

Formally, as explained in Schweinberger & Handcock (2015), the first class of statistics contains those that have the form where each term is a product of edge variables that captures the interaction desired. We will also make use of the set which is a similar Cartesian product. When we write , we mean that every component of the -tuple is an element of . Furthermore, by an abuse of notation, we will write to mean that and are vertices contained in the -tuple . Now we are ready to prove the first case of the theorem.

Proof. As the networks are unweighted, all edge variables . Let . Then define . Therefore, without loss of generality, we may work with , which has the convenient property that . This means that we can similarly shift our statistics of interest, . Therefore, call , so that .

Note that we can write with and where the indicator functions restrict the sums to within the -th neighborhood and between neighborhoods of the graph, respectively. Specifically, when the -tuple of nodes contains nodes from different neighborhoods, or exactly when for all neighborhoods . By splitting the statistic into the within and between neighborhood portions, we are able to make use of the independence relation between edges that connect neighborhoods. We also have and , as each quantity is a sum of random variables with mean zero.
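The split into within-neighborhood and between-neighborhood portions can be sketched for the simplest statistic, the edge count. The simulation below is an illustrative assumption rather than the model of this chapter: edges are drawn as independent Bernoulli variables, with a hypothetical probability `p_within` inside a neighborhood and a smaller `p_between` across neighborhoods, and the statistic is left uncentered.

```python
import random
from itertools import combinations


def simulate_network(num_neighborhoods, size, p_within, p_between, seed=0):
    """Draw a toy network made of equally sized neighborhoods.

    Assumption for illustration only: every edge is an independent
    Bernoulli draw, so local dependence holds trivially.
    """
    rng = random.Random(seed)
    # Node k * size + r belongs to neighborhood k.
    neighborhood_of = {
        k * size + r: k for k in range(num_neighborhoods) for r in range(size)
    }
    edges = set()
    for i, j in combinations(sorted(neighborhood_of), 2):
        same = neighborhood_of[i] == neighborhood_of[j]
        if rng.random() < (p_within if same else p_between):
            edges.add((i, j))
    return edges, neighborhood_of


def split_edge_count(edges, neighborhood_of, num_neighborhoods):
    """Return (per-neighborhood within counts, total between count)."""
    within = [0] * num_neighborhoods
    between = 0
    for i, j in edges:
        if neighborhood_of[i] == neighborhood_of[j]:
            within[neighborhood_of[i]] += 1
        else:
            between += 1
    return within, between


edges, neighborhood_of = simulate_network(
    num_neighborhoods=20, size=5, p_within=0.4, p_between=0.01
)
within, between = split_edge_count(edges, neighborhood_of, 20)
total = len(edges)
```

Every edge falls into exactly one within-neighborhood count or into the between-neighborhood count, so `sum(within) + between` always equals `total`. The per-neighborhood counts play the role of the rows of the triangular array, and the between count is the remainder that the proof shows to be negligible.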

The idea of this proof is to gain control over the variances of and all the elements of the sequence . We can then show that converges in probability to zero and that the triangular array satisfies Lyapounov’s condition, and is thus asymptotically normal. Finally, Slutsky’s theorem allows us to extend the result to .
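The step from a vanishing variance to convergence in probability is the standard Chebyshev argument, sketched below. The symbol $Y_K$ is a generic placeholder for a mean-zero sequence indexed by the number of neighborhoods; it is introduced here for illustration and is not the chapter's own notation.

```latex
% For a sequence Y_K with E[Y_K] = 0 and any fixed \varepsilon > 0,
% Chebyshev's inequality gives
\[
  \mathbb{P}\bigl( \lvert Y_K \rvert \ge \varepsilon \bigr)
  \;\le\; \frac{\operatorname{Var}(Y_K)}{\varepsilon^{2}} ,
\]
% so that
\[
  \operatorname{Var}(Y_K) \to 0
  \;\;\Longrightarrow\;\;
  Y_K \xrightarrow{\;p\;} 0
  \quad \text{as } K \to \infty .
\]
```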

To bound the variance of , note that Despite independence, some of these covariances may be nonzero if the two terms of the statistic both involve the same edge. For example, in Figure 3.1, a statistic that counted the number of edges between gray nodes plus the number of edges between diamond shaped nodes would have a nonzero covariance term because of the edge between the two nodes that are both gray and diamond shaped.

To show that, in the limit, these covariances vanish, we need only concern ourselves with the nonzero terms in the sum. That is, only those terms where . This happens exactly when both and involve a between neighborhood edge variable. So, note that we have as the expectation of each term is zero. Next we take to be one of the (possibly many) between neighborhood edge variables in this product (such that where is any tuple containing and ) and to be the recentered random variable corresponding to . Then, where we must consider the case where to account for the covariance of and when and the case where to account for the variance of , which is computed in the case where . So, if , then by the local dependence property and the assumption that . The local dependence property allows us to factor out the expectation of , as this edge is between neighborhoods, and therefore independent of every other edge in the graph. Now, if we have , then, by sparsity and the fact that the product below is at most , where $D$ is a constant that bounds the expectation above. There exists such a constant because each of the $V_{mn}$ is bounded by definition, so a product of them is bounded. So as grows large, the between neighborhood covariances all become asymptotically negligible.

Therefore, we can conclude that So we have Then, for all , Chebyshev’s inequality gives us so

Next, we bound the within neighborhood covariances, as we also have As the covariance forms an inner product on the space of square integrable random variables, the Cauchy-Schwarz inequality gives us Then, as each has expectation zero, we know that As , we know for all tuples , so we have the bound

Now all that remains is to apply the Lindeberg-Feller central limit theorem to the double sequence . To that end, first note that, as each neighborhood contains at most a finite number of nodes, , we can show that Now we prove that Lyapounov’s condition (3.2) holds for the constant in the exponent . So where tends to infinity by assumption. Therefore, Lyapounov’s condition holds, and so by the Lindeberg-Feller central limit theorem, we have, Slutsky’s theorem (3.4) gives the final result for . Then we have as desired.

a product with at most terms.

exactly as before, incorporating the function into each as we did above. Then the binary nature of the graph and the uniform boundedness of allow us to once again recenter the , meaning that we will work with . We also have , so as well.

For the between neighborhood covariances, we once again choose , a between neighborhood network variable. Then we once again write by the local dependence property. Then, when , we have by assumption, so the covariance is identically zero. When we have by sparsity and almost surely by uniform boundedness and the fact this product has at most terms. This follows from the fact that is bounded by and that is bounded by some constant , by definition. So which tends to zero as grows large. So, again by Chebyshev’s inequality, we have

Next we bound the within neighborhood covariances. Now with each , we have

Now, we show that Lyapounov’s condition (3.2) holds for the same . Once again note that each neighborhood has at most nodes, so Then Lyapounov’s condition is Therefore, by the Lindeberg-Feller central limit theorem and Slutsky’s theorem, we have

Finally, the last class of statistic is that which depends only on the nodal variates. This result follows directly from a central limit theorem for -dependent random variables, which can be found in Billingsley (1995, p. 364). Applying this theorem requires that we assume that the statistic in question depends only on a single variable across nodes. Therefore we assume that the statistic depends only on a single nodal covariate.

In practice, the hypothesis that the twelfth moment exists is satisfied for most reasonable distributional assumptions about nodal covariates. Furthermore, the assumption that all nodal variates have expectation zero can easily be satisfied by recentering the observed data. Finally, the delta method gives us an asymptotically normal distribution for a differentiable statistic of the nodal variate. The univariate nature of the statistic is a fundamental limitation of this approach; however, I am unable to find an analogous multidimensional central limit theorem that would allow us to establish the asymptotic normality of a statistic of multiple nodal variates.
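For completeness, the univariate delta method invoked above is commonly stated as follows. The symbols $T_n$, $\theta$, $\sigma^2$ and $g$ are generic placeholders for this illustration; they are not tied to a specific statistic defined in this chapter.

```latex
% Suppose T_n is asymptotically normal around \theta and g is
% differentiable at \theta with g'(\theta) \neq 0. Then
\[
  \sqrt{n}\,\bigl( T_n - \theta \bigr)
  \xrightarrow{\;d\;} N\bigl( 0, \sigma^{2} \bigr)
  \;\;\Longrightarrow\;\;
  \sqrt{n}\,\bigl( g(T_n) - g(\theta) \bigr)
  \xrightarrow{\;d\;} N\bigl( 0, \sigma^{2}\, g'(\theta)^{2} \bigr) .
\]
```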

References

Fellows, I., & Handcock, M. S. (2012). Exponential-family random network models. arXiv preprint arXiv:1208.0121.

Schweinberger, M., & Handcock, M. S. (2015). Local dependence in random graph models: Characterization, properties and statistical inference. Journal of the Royal Statistical Society: Series B (Statistical Methodology), 77(3), 647–676.

Billingsley, P. (1995). Probability and measure (3rd ed., authorized reprint). New Delhi: Wiley India.

Shalizi, C. R., & Rinaldo, A. (2013). Consistency under sampling of exponential random graph models. The Annals of Statistics, 41(2), 508–535. http://doi.org/10.1214/12-AOS1044